Normalize AI text before publishing

Normalizing AI-generated text is the step that turns unstable output into publishing-ready content. AI text often looks correct during editing, then behaves unpredictably once pasted into a CMS, social platform, or mobile app. Wrapping fails, truncation triggers too early, and parsing becomes inconsistent across platforms.

These failures are often caused by invisible Unicode artifacts, such as non-breaking spaces and zero-width characters, that survive rendering layers and copy-paste workflows. Normalization removes that hidden structure before publishing, so the visible text behaves consistently everywhere it is used.

The sections below define normalization, position it within AI workflows, and map its effects to real publishing failures (wrapping refusal, broken hashtags, early truncation). They also present a repeatable normalization step for workflows where predictable behavior matters more than preserving invisible formatting.

What normalization means

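Normalization is the process of standardizing the structural layer of text before publishing. It removes unintended invisible Unicode artifacts while preserving meaning, emoji integrity, and multilingual shaping. Unlike rewriting or paraphrasing, normalization operates below the language layer. It is also not the same thing as the Unicode normalization forms (NFC, NFKC): those unify equivalent encodings of the same character and do not remove zero-width characters.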

In AI workflows, normalization targets artifacts such as non-breaking spaces that block wrapping, zero-width characters that split tokens invisibly, and hidden formatting residue introduced during rendering and clipboard transport.
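
To make these artifacts concrete, here is a minimal Python sketch that reports where they sit in a string. The `WATCHED` set and the `find_invisibles` name are assumptions made for illustration, not a complete inventory of invisible Unicode.

```python
import unicodedata

# Assumed watch list for this sketch; extend or trim it for your content.
WATCHED = {
    "\u00a0",  # NO-BREAK SPACE
    "\u202f",  # NARROW NO-BREAK SPACE
    "\u200b",  # ZERO WIDTH SPACE
    "\u200c",  # ZERO WIDTH NON-JOINER
    "\u200d",  # ZERO WIDTH JOINER
    "\u2060",  # WORD JOINER
    "\ufeff",  # ZERO WIDTH NO-BREAK SPACE (byte order mark)
}

def find_invisibles(text):
    """Return (index, 'U+XXXX', name) for every watched character in text."""
    return [
        (i, f"U+{ord(ch):04X}", unicodedata.name(ch, "UNKNOWN"))
        for i, ch in enumerate(text)
        if ch in WATCHED
    ]

report = find_invisibles("Read\u00a0more about #launch\u200bday")
for index, code_point, name in report:
    print(index, code_point, name)
```

A report like this only shows that a character is present. Whether it is unintended depends on context, for example a zero-width joiner inside an emoji sequence is legitimate.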

Why normalization is critical for AI workflows

AI-generated text is rarely written directly into the destination editor. It is generated elsewhere, rendered for readability, and then pasted. Each step adds a layer where invisible structure can persist. Without normalization, that structure reaches platforms that enforce strict layout and parsing rules.

Normalization collapses hidden variability into a predictable form. Once applied, the same text behaves the same way across platforms, devices, and editors.

Normalization vs detection

Detecting invisible Unicode manually is unreliable and slow. Editors hide these characters by design, and ordinary find-and-replace cannot target a character you can neither see nor type unless the tool accepts code-point escapes such as \u200B. Normalization replaces detection with prevention by standardizing text before issues surface.

Symptoms that normalization resolves

Normalization directly addresses the most common AI publishing failures. Headings and captions regain flexible wrapping. Hashtags and mentions become reliably parsable. Truncation thresholds behave consistently on mobile. Selection and cursor behavior stabilizes.

These improvements are not cosmetic. They remove entire classes of platform-specific bugs that otherwise require manual debugging after publishing.
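
The hashtag symptom can be reproduced in a few lines of Python. The pattern `#\w+` is only a stand-in for a platform's parser (real platforms apply their own rules), and the sample caption is invented for the demonstration.

```python
import re

# Stand-in hashtag pattern for illustration; not any real platform's parser.
HASHTAG = re.compile(r"#\w+")

raw = "Ship it #launch\u200bday"   # ZERO WIDTH SPACE hidden inside the tag
clean = raw.replace("\u200b", "")  # the same caption with the artifact removed

tags_before = HASHTAG.findall(raw)
tags_after = HASHTAG.findall(clean)

print(tags_before)  # ['#launch']
print(tags_after)   # ['#launchday']
```

Both strings look identical on screen, yet the first yields a truncated tag because the zero-width space is not a word character and ends the match early.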

When to normalize AI text

The optimal moment to normalize AI-generated text is after editing and before publishing. At this point, the wording is final, but the text has not yet entered a platform that will enforce layout and parsing constraints.

Normalizing earlier can interfere with editing. Normalizing later means the damage is already done. Placing normalization just before publishing preserves intent while preventing downstream failures.

How safe normalization works

Safe normalization removes only unintended structure. Required Unicode for emoji sequences (ZWJ), multilingual scripts, and legitimate formatting contexts is preserved. Non-breaking spaces are replaced where flexible wrapping is required. Zero-width boundaries are removed where they interfere with tokenization.

This selective approach is what differentiates normalization from destructive cleanup. The goal is behavioral stability, not typographic flattening.
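
A minimal Python sketch of this selective behavior follows. It rests on a loudly simplified assumption: a zero-width joiner or non-joiner is treated as intentional only when both neighbors are non-ASCII characters (emoji or non-Latin script), and every non-breaking space is replaced rather than only those that block wrapping. The function and set names are hypothetical, and a production tool would need proper script and emoji-sequence awareness.

```python
NBSP_LIKE = {"\u00a0", "\u202f"}                 # replaced with a plain space
ALWAYS_REMOVE = {"\u200b", "\u2060", "\ufeff"}   # ZWSP, word joiner, BOM
JOINERS = {"\u200c", "\u200d"}                   # ZWNJ, ZWJ: kept only in context
INVISIBLE = NBSP_LIKE | ALWAYS_REMOVE | JOINERS

def _is_script_char(ch):
    """Heuristic: a visible non-ASCII character (emoji, non-Latin letter)."""
    return bool(ch) and not ch.isascii() and ch not in INVISIBLE

def normalize(text):
    """Remove unintended invisible characters; keep joiners that look intentional."""
    out = []
    for i, ch in enumerate(text):
        if ch in NBSP_LIKE:
            out.append(" ")
        elif ch in ALWAYS_REMOVE:
            continue
        elif ch in JOINERS:
            prev = text[i - 1] if i > 0 else ""
            nxt = text[i + 1] if i + 1 < len(text) else ""
            # Assumption: joiners between two non-ASCII characters are required
            # for emoji sequences or script shaping, so they are preserved.
            if _is_script_char(prev) and _is_script_char(nxt):
                out.append(ch)
        else:
            out.append(ch)
    return "".join(out)

print(normalize("Read\u00a0more #launch\u200bday"))  # Read more #launchday
```

Under this heuristic, a sequence such as woman + ZWJ + laptop passes through unchanged, while a stray joiner between two ASCII letters is dropped. The function is also idempotent: running it twice gives the same result as running it once.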

Normalization in a publishing workflow

In practice, normalization becomes a hygiene layer between AI tools and real platforms. Content is generated, edited, normalized, then published. Once this step is adopted, AI text behaves like any other clean text across platforms.

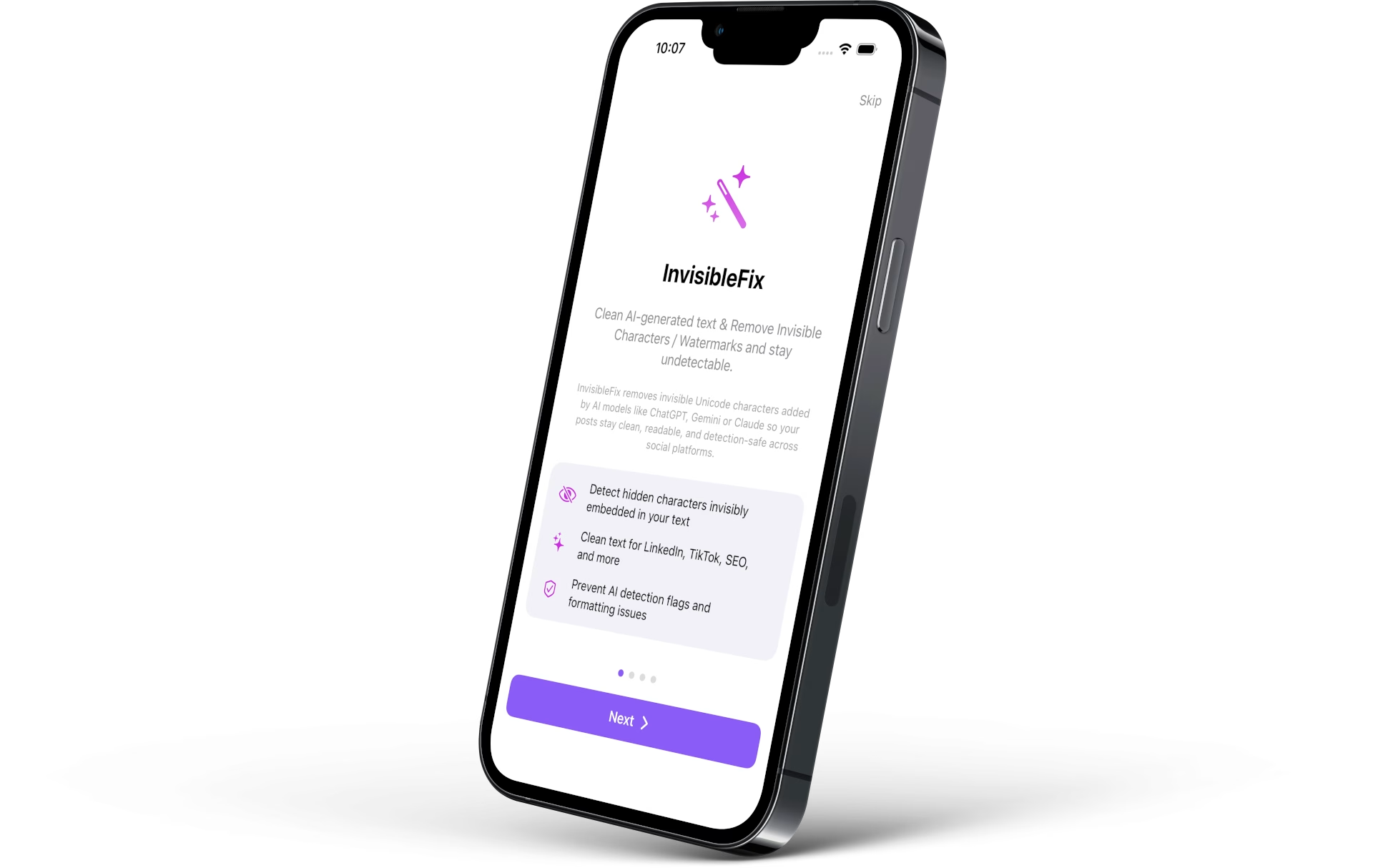

Normalization workflows are introduced in "Clean AI-generated text". For immediate use, normalization can be applied locally using app.invisiblefix.app, which removes invisible artifacts without transmitting drafts externally.

Normalization does not change what AI text says. It changes how reliably that text behaves once it leaves the editor.